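R is an excellent data mining tool, with first-rate capabilities for data processing, data mining, and data visualization. It is an essential tool for anyone who works with data. Its in-memory design, however, has long been a common complaint. Many excellent extension packages have greatly improved R's performance in recent years, but R can still struggle with enterprise-scale data mining.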

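We no longer need to worry about this limitation. Since Microsoft acquired the commercial edition of R, it has done a great deal of integration and optimization work. The product used to be free only for university students, but data practitioners in industry can now also download Microsoft R Server (MRS) for free and use it to process big data. MRS is 100% compatible with open-source R and can use the 10,000+ extension packages on CRAN to meet different data mining needs.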

For installing Microsoft R Server, Chen Yanping has already written a very detailed document; interested readers can follow that document to install it.

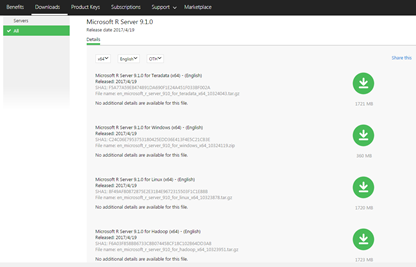

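Note: make sure you end up on the download page and choose the latest version, Microsoft R Server 9.1.0. Microsoft thoughtfully provides installers for different operating systems, as shown below: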

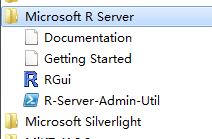

After a successful installation following Yanping's steps, the following entries appear on your computer:

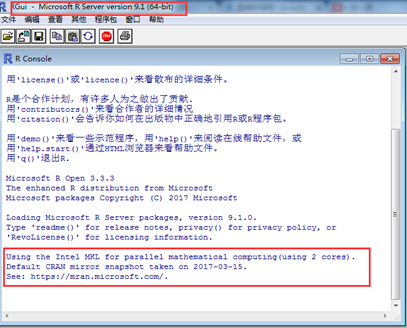

This means MRS has been installed successfully. Clicking RGui opens an interface similar to that of open-source R:

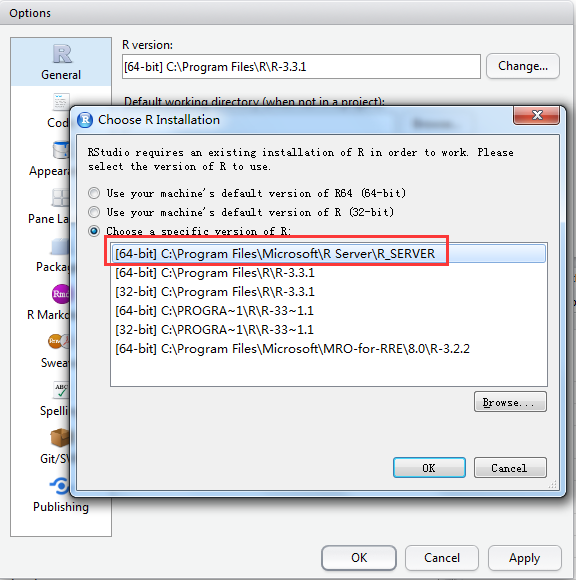

We can also use MRS from RStudio: in the R version setting (under Tools > Global Options), simply select MRS.

Now that MRS is installed, let's work through a simple example to get started quickly.

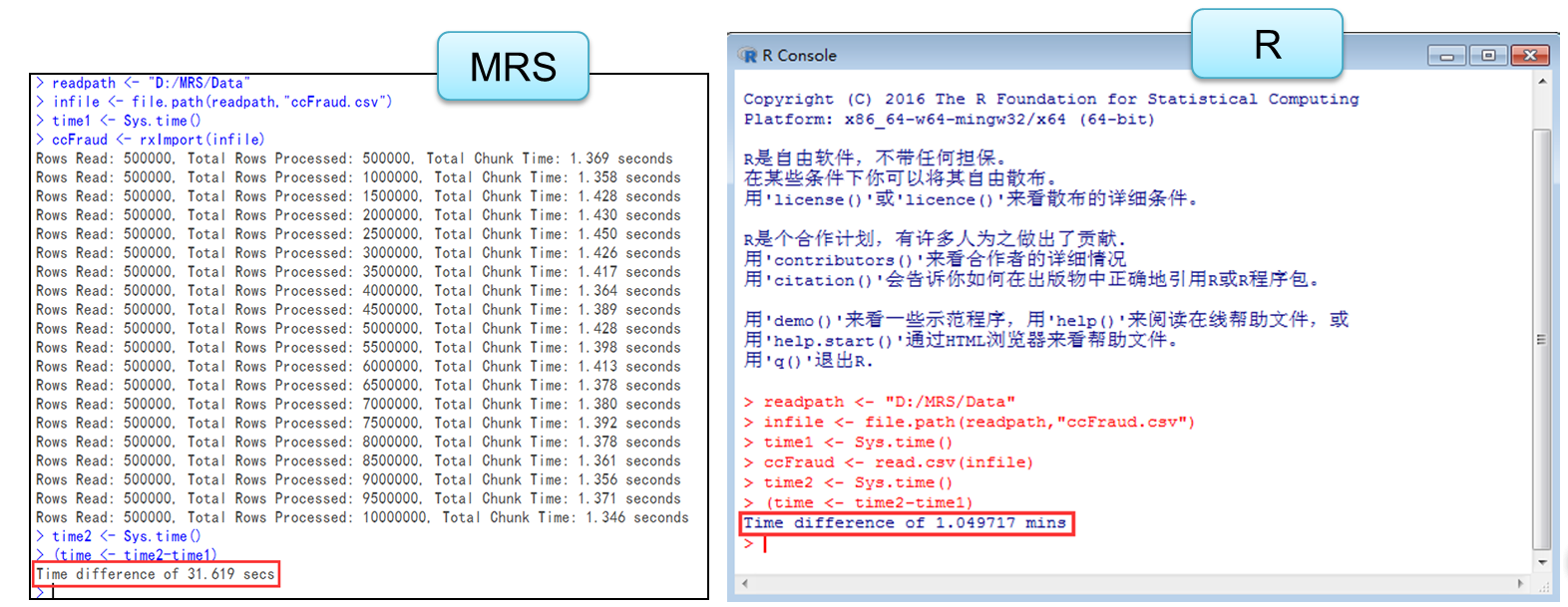

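If the data set is not large, it can be imported directly into R or MRS and processed there (MRS is faster than R). We import the ccFraud.csv data set, which has ten million records, and compare the import time in MRS and in R.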

Importing the data took 31 seconds with MRS and 1 minute with R, so MRS was roughly twice as fast.
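A comparison like this could be reproduced along the following lines. This is only a minimal sketch: `time_import` is a hypothetical helper introduced here, the file path is assumed to be the one used later in this post, and `rxImport` is only called when the RevoScaleR package (shipped with MRS) is available. Timings will of course depend on your hardware.

```r
# Hypothetical helper: run one import function and return elapsed seconds.
time_import <- function(import_fun, path) {
  unname(system.time(import_fun(path))["elapsed"])
}

# Assumed location of the data set (the same path used later in this post).
infile <- file.path("D:/MRS/Data", "ccFraud.csv")

if (file.exists(infile)) {
  # Open-source R: read the whole file into an in-memory data frame.
  r_seconds <- time_import(function(p) read.csv(p), infile)
  cat("read.csv elapsed seconds:", r_seconds, "\n")

  # MRS: rxImport lives in RevoScaleR, so only run it when that package exists.
  if (requireNamespace("RevoScaleR", quietly = TRUE)) {
    mrs_seconds <- time_import(
      function(p) RevoScaleR::rxImport(inData = p), infile
    )
    cat("rxImport elapsed seconds:", mrs_seconds, "\n")
  }
}
```

Wrapping both imports in the same helper keeps the measurement identical for the two readers, so only the import function itself differs.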

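For large data sets, MRS can first save the data to disk in the .xdf format. The resulting data object can be used by most functions in the RevoScaleR package (data processing, data transformation, modeling, and so on). We do this with the rxImport function, setting its outFile argument to the name of the file to save.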

    > # Import the CSV data set
    > readpath <- "D:/MRS/Data"
    > infile <- file.path(readpath, "ccFraud.csv")
    > ccFraud_xdf <- rxImport(inData = infile,
    +                         outFile = "ccFraud.xdf",
    +                         overwrite = TRUE)
    Rows Read: 500000, Total Rows Processed: 500000, Total Chunk Time: 1.393 seconds
    Rows Read: 500000, Total Rows Processed: 1000000, Total Chunk Time: 1.440 seconds
    Rows Read: 500000, Total Rows Processed: 1500000, Total Chunk Time: 1.462 seconds
    Rows Read: 500000, Total Rows Processed: 2000000, Total Chunk Time: 1.462 seconds
    Rows Read: 500000, Total Rows Processed: 2500000, Total Chunk Time: 1.475 seconds
    Rows Read: 500000, Total Rows Processed: 3000000, Total Chunk Time: 1.408 seconds
    Rows Read: 500000, Total Rows Processed: 3500000, Total Chunk Time: 1.454 seconds
    Rows Read: 500000, Total Rows Processed: 4000000, Total Chunk Time: 1.381 seconds
    Rows Read: 500000, Total Rows Processed: 4500000, Total Chunk Time: 1.417 seconds
    Rows Read: 500000, Total Rows Processed: 5000000, Total Chunk Time: 1.429 seconds
    Rows Read: 500000, Total Rows Processed: 5500000, Total Chunk Time: 1.440 seconds
    Rows Read: 500000, Total Rows Processed: 6000000, Total Chunk Time: 1.425 seconds
    Rows Read: 500000, Total Rows Processed: 6500000, Total Chunk Time: 1.452 seconds
    Rows Read: 500000, Total Rows Processed: 7000000, Total Chunk Time: 1.456 seconds
    Rows Read: 500000, Total Rows Processed: 7500000, Total Chunk Time: 1.406 seconds
    Rows Read: 500000, Total Rows Processed: 8000000, Total Chunk Time: 1.379 seconds
    Rows Read: 500000, Total Rows Processed: 8500000, Total Chunk Time: 1.434 seconds
    Rows Read: 500000, Total Rows Processed: 9000000, Total Chunk Time: 1.409 seconds
    Rows Read: 500000, Total Rows Processed: 9500000, Total Chunk Time: 1.422 seconds
    Rows Read: 500000, Total Rows Processed: 10000000, Total Chunk Time: 1.384 seconds

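When the import finishes, we use the rxGetInfo function to inspect the structure of the .xdf file (by setting getVarInfo to TRUE) and to view the first ten rows of the data (numRows = 10).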

    > rxGetInfo("ccFraud.xdf", getVarInfo = TRUE, numRows = 10)
    File name: C:\Program Files\Microsoft\R Server\R_SERVER\library\RevoScaleR\rxLibs\x64\ccFraud.xdf
    Number of observations: 1e+07
    Number of variables: 9
    Number of blocks: 20
    Compression type: zlib
    Variable information:
    Var 1: custID, Type: integer, Low/High: (1, 1e+07)
    Var 2: gender, Type: integer, Low/High: (1, 2)
    Var 3: state, Type: integer, Low/High: (1, 51)
    Var 4: cardholder, Type: integer, Low/High: (1, 2)
    Var 5: balance, Type: integer, Low/High: (0, 41485)
    Var 6: numTrans, Type: integer, Low/High: (0, 100)
    Var 7: numIntlTrans, Type: integer, Low/High: (0, 60)
    Var 8: creditLine, Type: integer, Low/High: (1, 75)
    Var 9: fraudRisk, Type: integer, Low/High: (0, 1)
    Data (10 rows starting with row 1):
       custID gender state cardholder balance numTrans numIntlTrans creditLine fraudRisk
     1      1      1    35          1    3000        4           14          2         0
     2      2      2     2          1       0        9            0         18         0
     3      3      2     2          1       0       27            9         16         0
     4      4      1    15          1       0       12            0          5         0
     5      5      1    46          1       0       11           16          7         0
     6      6      2    44          2    5546       21            0         13         0
     7      7      1     3          1    2000       41            0          1         0
     8      8      1    10          1    6016       20            3          6         0
     9      9      2    32          1    2428        4           10         22         0
    10     10      1    23          1       0       18           56          5         0

When saving the .xdf file, we can also change the data types of variables with arguments such as stringsAsFactors, colClasses, and colInfo. For example, we use colInfo to convert the variable gender from numeric to a factor with levels "F" and "M", and colClasses to convert the variable fraudRisk from numeric to a factor.

    > # Change the storage types of variables
    > ccFraud_xdf <- rxImport(inData = infile,
    +                         outFile = "ccFraud.xdf",
    +                         colClasses = c(fraudRisk = "factor"),
    +                         colInfo = list("gender" = list(type = "factor",
    +                                                        levels = c("1", "2"),
    +                                                        newLevels = c("F", "M"))),
    +                         overwrite = TRUE)
    Rows Read: 500000, Total Rows Processed: 500000, Total Chunk Time: 1.871 seconds
    Rows Read: 500000, Total Rows Processed: 1000000, Total Chunk Time: 1.832 seconds
    Rows Read: 500000, Total Rows Processed: 1500000, Total Chunk Time: 1.825 seconds
    Rows Read: 500000, Total Rows Processed: 2000000, Total Chunk Time: 1.808 seconds
    Rows Read: 500000, Total Rows Processed: 2500000, Total Chunk Time: 2.018 seconds
    Rows Read: 500000, Total Rows Processed: 3000000, Total Chunk Time: 2.061 seconds
    Rows Read: 500000, Total Rows Processed: 3500000, Total Chunk Time: 2.158 seconds
    Rows Read: 500000, Total Rows Processed: 4000000, Total Chunk Time: 1.917 seconds
    Rows Read: 500000, Total Rows Processed: 4500000, Total Chunk Time: 1.852 seconds
    Rows Read: 500000, Total Rows Processed: 5000000, Total Chunk Time: 1.795 seconds
    Rows Read: 500000, Total Rows Processed: 5500000, Total Chunk Time: 1.829 seconds
    Rows Read: 500000, Total Rows Processed: 6000000, Total Chunk Time: 1.793 seconds
    Rows Read: 500000, Total Rows Processed: 6500000, Total Chunk Time: 1.849 seconds
    Rows Read: 500000, Total Rows Processed: 7000000, Total Chunk Time: 1.806 seconds
    Rows Read: 500000, Total Rows Processed: 7500000, Total Chunk Time: 1.773 seconds
    Rows Read: 500000, Total Rows Processed: 8000000, Total Chunk Time: 1.813 seconds
    Rows Read: 500000, Total Rows Processed: 8500000, Total Chunk Time: 1.812 seconds
    Rows Read: 500000, Total Rows Processed: 9000000, Total Chunk Time: 1.850 seconds
    Rows Read: 500000, Total Rows Processed: 9500000, Total Chunk Time: 1.824 seconds
    Rows Read: 500000, Total Rows Processed: 10000000, Total Chunk Time: 1.828 seconds
    >
    > # Inspect the structure of ccFraud_xdf
    > rxGetInfo(ccFraud_xdf, getVarInfo = TRUE, numRows = 5)
    File name: C:\Program Files\Microsoft\R Server\R_SERVER\library\RevoScaleR\rxLibs\x64\ccFraud.xdf
    Number of observations: 1e+07
    Number of variables: 9
    Number of blocks: 20
    Compression type: zlib
    Variable information:
    Var 1: custID, Type: integer, Low/High: (1, 1e+07)
    Var 2: gender
           2 factor levels: F M
    Var 3: state, Type: integer, Low/High: (1, 51)
    Var 4: cardholder, Type: integer, Low/High: (1, 2)
    Var 5: balance, Type: integer, Low/High: (0, 41485)
    Var 6: numTrans, Type: integer, Low/High: (0, 100)
    Var 7: numIntlTrans, Type: integer, Low/High: (0, 60)
    Var 8: creditLine, Type: integer, Low/High: (1, 75)
    Var 9: fraudRisk
           2 factor levels: 0 1
    Data (5 rows starting with row 1):
      custID gender state cardholder balance numTrans numIntlTrans creditLine fraudRisk
    1      1      F    35          1    3000        4           14          2         0
    2      2      M     2          1       0        9            0         18         0
    3      3      M     2          1       0       27            9         16         0
    4      4      F    15          1       0       12            0          5         0
    5      5      F    46          1       0       11           16          7         0

The structure shows that the variable types have changed, and that the factor levels of gender have changed from 1 and 2 to F and M.
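For readers more familiar with open-source R, the effect of the levels/newLevels pair in colInfo is comparable to the levels/labels pair of base R's `factor()`. The sketch below is only an in-memory analogy on a five-element vector (the first five gender codes from the output above), not what rxImport does internally.

```r
# First five gender codes from the data shown above (1/2 as stored in the CSV).
gender_codes <- c(1L, 2L, 2L, 1L, 1L)

# levels = the codes found in the data; labels = the names shown instead.
# This mirrors levels = c("1", "2") and newLevels = c("F", "M") in colInfo.
gender <- factor(gender_codes, levels = c(1, 2), labels = c("F", "M"))

print(gender)         # the recoded factor
print(table(gender))  # counts per level
```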

We can also compute descriptive statistics on the .xdf file with the rxSummary function.

    > # Descriptive statistics with the rxSummary function
    > rxSummary(~., ccFraud_xdf) # summarize all variables
    Rows Read: 500000, Total Rows Processed: 500000, Total Chunk Time: 0.069 seconds
    Rows Read: 500000, Total Rows Processed: 1000000, Total Chunk Time: 0.072 seconds
    Rows Read: 500000, Total Rows Processed: 1500000, Total Chunk Time: 0.078 seconds
    Rows Read: 500000, Total Rows Processed: 2000000, Total Chunk Time: 0.079 seconds
    Rows Read: 500000, Total Rows Processed: 2500000, Total Chunk Time: 0.080 seconds
    Rows Read: 500000, Total Rows Processed: 3000000, Total Chunk Time: 0.081 seconds
    Rows Read: 500000, Total Rows Processed: 3500000, Total Chunk Time: 0.081 seconds
    Rows Read: 500000, Total Rows Processed: 4000000, Total Chunk Time: 0.080 seconds
    Rows Read: 500000, Total Rows Processed: 4500000, Total Chunk Time: 0.077 seconds
    Rows Read: 500000, Total Rows Processed: 5000000, Total Chunk Time: 0.080 seconds
    Rows Read: 500000, Total Rows Processed: 5500000, Total Chunk Time: 0.080 seconds
    Rows Read: 500000, Total Rows Processed: 6000000, Total Chunk Time: 0.085 seconds
    Rows Read: 500000, Total Rows Processed: 6500000, Total Chunk Time: 0.080 seconds
    Rows Read: 500000, Total Rows Processed: 7000000, Total Chunk Time: 0.078 seconds
    Rows Read: 500000, Total Rows Processed: 7500000, Total Chunk Time: 0.082 seconds
    Rows Read: 500000, Total Rows Processed: 8000000, Total Chunk Time: 0.084 seconds
    Rows Read: 500000, Total Rows Processed: 8500000, Total Chunk Time: 0.082 seconds
    Rows Read: 500000, Total Rows Processed: 9000000, Total Chunk Time: 0.079 seconds
    Rows Read: 500000, Total Rows Processed: 9500000, Total Chunk Time: 0.086 seconds
    Rows Read: 500000, Total Rows Processed: 10000000, Total Chunk Time: 0.078 seconds
    Computation time: 1.661 seconds.
    Call:
    rxSummary(formula = ~., data = ccFraud_xdf)

    Summary Statistics Results for: ~.
    Data: ccFraud_xdf (RxXdfData Data Source)
    File name: ccFraud.xdf
    Number of valid observations: 1e+07

     Name         Mean         StdDev       Min Max      ValidObs MissingObs
     custID       5.000001e+06 2.886751e+06 1   10000000 1e+07    0
     state        2.466127e+01 1.497012e+01 1         51 1e+07    0
     cardholder   1.030004e+00 1.705991e-01 1          2 1e+07    0
     balance      4.109920e+03 3.996847e+03 0      41485 1e+07    0
     numTrans     2.893519e+01 2.655378e+01 0        100 1e+07    0
     numIntlTrans 4.047190e+00 8.602970e+00 0         60 1e+07    0
     creditLine   9.134469e+00 9.641974e+00 1         75 1e+07    0

    Category Counts for gender
    Number of categories: 2
    Number of valid observations: 1e+07
    Number of missing observations: 0

     gender Counts
     F      6178231
     M      3821769

    Category Counts for fraudRisk
    Number of categories: 2
    Number of valid observations: 1e+07
    Number of missing observations: 0

     fraudRisk Counts
     0         9403986
     1          596014

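As with the ordinary summary function, numeric variables get a mean, standard deviation, minimum, maximum, number of valid observations, and number of missing values, while factor variables get frequency counts.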
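The "Rows Read" lines in the output show that the file is processed one block at a time rather than loaded into memory all at once. To give an intuition for how a mean and standard deviation can be obtained that way, here is a base R sketch that accumulates running totals chunk by chunk. It is an illustration only and makes no claim about RevoScaleR's actual implementation; `chunked_mean_sd` is a hypothetical function and the data are synthetic.

```r
# Accumulate count, sum, and sum of squares one chunk at a time,
# then derive the mean and sample standard deviation at the end.
# Assumes x is a non-empty numeric vector.
chunked_mean_sd <- function(x, chunk_size) {
  n <- 0
  s <- 0
  ss <- 0
  for (start in seq(1, length(x), by = chunk_size)) {
    chunk <- x[start:min(start + chunk_size - 1, length(x))]
    n  <- n + length(chunk)
    s  <- s + sum(chunk)
    ss <- ss + sum(chunk^2)
  }
  m <- s / n
  c(mean = m, sd = sqrt((ss - n * m^2) / (n - 1)))
}

set.seed(1)
balance <- round(rexp(10000, rate = 1 / 4000))  # synthetic stand-in data

result <- chunked_mean_sd(balance, chunk_size = 500)
print(result)
print(c(mean = mean(balance), sd = sd(balance)))  # in-memory reference
```

Only three running totals are kept between chunks, which is why memory use does not grow with the number of rows. The sum-of-squares formula is the simplest to read but not the most numerically stable choice; it is used here purely for clarity.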

Beyond these simple operations, ScaleR also includes a rich set of data processing functions and algorithms.

Finally, let's build a logistic regression model with the rxLogit function (R's glm function also works) and view the model information with the summary function.

    > # Logistic regression model
    > ccFraudglm <- rxLogit(fraudRisk ~ gender + cardholder + balance + numTrans +
    +                         numIntlTrans + creditLine, data = ccFraud_xdf)
    Rows Read: 500000, Total Rows Processed: 500000, Total Chunk Time: 0.080 seconds
    Rows Read: 500000, Total Rows Processed: 1000000, Total Chunk Time: 0.081 seconds
    Rows Read: 500000, Total Rows Processed: 1500000, Total Chunk Time: 0.080 seconds
    Rows Read: 500000, Total Rows Processed: 2000000, Total Chunk Time: 0.071 seconds
    Rows Read: 500000, Total Rows Processed: 2500000, Total Chunk Time: 0.071 seconds
    Rows Read: 500000, Total Rows Processed: 3000000, Total Chunk Time: 0.068 seconds
    Rows Read: 500000, Total Rows Processed: 3500000, Total Chunk Time: 0.075 seconds
    Rows Read: 500000, Total Rows Processed: 4000000, Total Chunk Time: 0.070 seconds
    Rows Read: 500000, Total Rows Processed: 4500000, Total Chunk Time: 0.075 seconds
    Rows Read: 500000, Total Rows Processed: 5000000, Total Chunk Time: 0.069 seconds
    Rows Read: 500000, Total Rows Processed: 5500000, Total Chunk Time: 0.073 seconds
    Rows Read: 500000, Total Rows Processed: 6000000, Total Chunk Time: 0.072 seconds
    Rows Read: 500000, Total Rows Processed: 6500000, Total Chunk Time: 0.067 seconds
    Rows Read: 500000, Total Rows Processed: 7000000, Total Chunk Time: 0.077 seconds
    Rows Read: 500000, Total Rows Processed: 7500000, Total Chunk Time: 0.074 seconds
    Rows Read: 500000, Total Rows Processed: 8000000, Total Chunk Time: 0.073 seconds
    Rows Read: 500000, Total Rows Processed: 8500000, Total Chunk Time: 0.070 seconds
    Rows Read: 500000, Total Rows Processed: 9000000, Total Chunk Time: 0.077 seconds
    Rows Read: 500000, Total Rows Processed: 9500000, Total Chunk Time: 0.069 seconds
    Rows Read: 500000, Total Rows Processed: 10000000, Total Chunk Time: 0.071 seconds
    Starting values (iteration 1) time: 1.558 secs.
    Rows Read: 500000, Total Rows Processed: 500000, Total Chunk Time: 0.061 seconds
    Rows Read: 500000, Total Rows Processed: 1000000, Total Chunk Time: 0.182 seconds
    Rows Read: 500000, Total Rows Processed: 1500000, Total Chunk Time: 0.193 seconds
    Rows Read: 500000, Total Rows Processed: 2000000, Total Chunk Time: 0.184 seconds
    Rows Read: 500000, Total Rows Processed: 2500000, Total Chunk Time: 0.190 seconds
    Rows Read: 500000, Total Rows Processed: 3000000, Total Chunk Time: 0.193 seconds
    Rows Read: 500000, Total Rows Processed: 3500000, Total Chunk Time: 0.193 seconds
    Rows Read: 500000, Total Rows Processed: 4000000, Total Chunk Time: 0.192 seconds
    Rows Read: 500000, Total Rows Processed: 4500000, Total Chunk Time: 0.188 seconds
    Rows Read: 500000, Total Rows Processed: 5000000, Total Chunk Time: 0.197 seconds
    Rows Read: 500000, Total Rows Processed: 5500000, Total Chunk Time: 0.188 seconds
    Rows Read: 500000, Total Rows Processed: 6000000, Total Chunk Time: 0.195 seconds
    Rows Read: 500000, Total Rows Processed: 6500000, Total Chunk Time: 0.195 seconds
    Rows Read: 500000, Total Rows Processed: 7000000, Total Chunk Time: 0.200 seconds
    Rows Read: 500000, Total Rows Processed: 7500000, Total Chunk Time: 0.188 seconds
    Rows Read: 500000, Total Rows Processed: 8000000, Total Chunk Time: 0.193 seconds
    Rows Read: 500000, Total Rows Processed: 8500000, Total Chunk Time: 0.185 seconds
    Rows Read: 500000, Total Rows Processed: 9000000, Total Chunk Time: 0.186 seconds
    Rows Read: 500000, Total Rows Processed: 9500000, Total Chunk Time: 0.196 seconds
    Rows Read: 500000, Total Rows Processed: 10000000, Total Chunk Time: 0.192 seconds
    Iteration 2 time: 3.866 secs.
    Rows Read: 500000, Total Rows Processed: 500000, Total Chunk Time: 0.070 seconds
    Rows Read: 500000, Total Rows Processed: 1000000, Total Chunk Time: 0.189 seconds
    Rows Read: 500000, Total Rows Processed: 1500000, Total Chunk Time: 0.195 seconds
    Rows Read: 500000, Total Rows Processed: 2000000, Total Chunk Time: 0.205 seconds
    Rows Read: 500000, Total Rows Processed: 2500000, Total Chunk Time: 0.194 seconds
    Rows Read: 500000, Total Rows Processed: 3000000, Total Chunk Time: 0.188 seconds
    Rows Read: 500000, Total Rows Processed: 3500000, Total Chunk Time: 0.199 seconds
    Rows Read: 500000, Total Rows Processed: 4000000, Total Chunk Time: 0.194 seconds
    Rows Read: 500000, Total Rows Processed: 4500000, Total Chunk Time: 0.199 seconds
    Rows Read: 500000, Total Rows Processed: 5000000, Total Chunk Time: 0.194 seconds
    Rows Read: 500000, Total Rows Processed: 5500000, Total Chunk Time: 0.187 seconds
    Rows Read: 500000, Total Rows Processed: 6000000, Total Chunk Time: 0.191 seconds
    Rows Read: 500000, Total Rows Processed: 6500000, Total Chunk Time: 0.190 seconds
    Rows Read: 500000, Total Rows Processed: 7000000, Total Chunk Time: 0.195 seconds
    Rows Read: 500000, Total Rows Processed: 7500000, Total Chunk Time: 0.184 seconds
    Rows Read: 500000, Total Rows Processed: 8000000, Total Chunk Time: 0.201 seconds
    Rows Read: 500000, Total Rows Processed: 8500000, Total Chunk Time: 0.188 seconds
    Rows Read: 500000, Total Rows Processed: 9000000, Total Chunk Time: 0.208 seconds
    Rows Read: 500000, Total Rows Processed: 9500000, Total Chunk Time: 0.216 seconds
    Rows Read: 500000, Total Rows Processed: 10000000, Total Chunk Time: 0.191 seconds
    Iteration 3 time: 3.950 secs.
    Rows Read: 500000, Total Rows Processed: 500000, Total Chunk Time: 0.068 seconds
    Rows Read: 500000, Total Rows Processed: 1000000, Total Chunk Time: 0.197 seconds
    Rows Read: 500000, Total Rows Processed: 1500000, Total Chunk Time: 0.191 seconds
    Rows Read: 500000, Total Rows Processed: 2000000, Total Chunk Time: 0.191 seconds
    Rows Read: 500000, Total Rows Processed: 2500000, Total Chunk Time: 0.187 seconds
    Rows Read: 500000, Total Rows Processed: 3000000, Total Chunk Time: 0.190 seconds
    Rows Read: 500000, Total Rows Processed: 3500000, Total Chunk Time: 0.192 seconds
    Rows Read: 500000, Total Rows Processed: 4000000, Total Chunk Time: 0.201 seconds
    Rows Read: 500000, Total Rows Processed: 4500000, Total Chunk Time: 0.193 seconds
    Rows Read: 500000, Total Rows Processed: 5000000, Total Chunk Time: 0.190 seconds
    Rows Read: 500000, Total Rows Processed: 5500000, Total Chunk Time: 0.193 seconds
    Rows Read: 500000, Total Rows Processed: 6000000, Total Chunk Time: 0.211 seconds
    Rows Read: 500000, Total Rows Processed: 6500000, Total Chunk Time: 0.191 seconds
    Rows Read: 500000, Total Rows Processed: 7000000, Total Chunk Time: 0.189 seconds
    Rows Read: 500000, Total Rows Processed: 7500000, Total Chunk Time: 0.199 seconds
    Rows Read: 500000, Total Rows Processed: 8000000, Total Chunk Time: 0.198 seconds
    Rows Read: 500000, Total Rows Processed: 8500000, Total Chunk Time: 0.190 seconds
    Rows Read: 500000, Total Rows Processed: 9000000, Total Chunk Time: 0.188 seconds
    Rows Read: 500000, Total Rows Processed: 9500000, Total Chunk Time: 0.192 seconds
    Rows Read: 500000, Total Rows Processed: 10000000, Total Chunk Time: 0.181 seconds
    Iteration 4 time: 3.914 secs.
    Rows Read: 500000, Total Rows Processed: 500000, Total Chunk Time: 0.061 seconds
    Rows Read: 500000, Total Rows Processed: 1000000, Total Chunk Time: 0.191 seconds
    Rows Read: 500000, Total Rows Processed: 1500000, Total Chunk Time: 0.197 seconds
    Rows Read: 500000, Total Rows Processed: 2000000, Total Chunk Time: 0.201 seconds
    Rows Read: 500000, Total Rows Processed: 2500000, Total Chunk Time: 0.199 seconds
    Rows Read: 500000, Total Rows Processed: 3000000, Total Chunk Time: 0.189 seconds
    Rows Read: 500000, Total Rows Processed: 3500000, Total Chunk Time: 0.204 seconds
    Rows Read: 500000, Total Rows Processed: 4000000, Total Chunk Time: 0.192 seconds
    Rows Read: 500000, Total Rows Processed: 4500000, Total Chunk Time: 0.196 seconds
    Rows Read: 500000, Total Rows Processed: 5000000, Total Chunk Time: 0.202 seconds
    Rows Read: 500000, Total Rows Processed: 5500000, Total Chunk Time: 0.194 seconds
    Rows Read: 500000, Total Rows Processed: 6000000, Total Chunk Time: 0.185 seconds
    Rows Read: 500000, Total Rows Processed: 6500000, Total Chunk Time: 0.188 seconds
    Rows Read: 500000, Total Rows Processed: 7000000, Total Chunk Time: 0.195 seconds
    Rows Read: 500000, Total Rows Processed: 7500000, Total Chunk Time: 0.207 seconds
    Rows Read: 500000, Total Rows Processed: 8000000, Total Chunk Time: 0.199 seconds
    Rows Read: 500000, Total Rows Processed: 8500000, Total Chunk Time: 0.192 seconds
    Rows Read: 500000, Total Rows Processed: 9000000, Total Chunk Time: 0.186 seconds
    Rows Read: 500000, Total Rows Processed: 9500000, Total Chunk Time: 0.202 seconds
    Rows Read: 500000, Total Rows Processed: 10000000, Total Chunk Time: 0.209 seconds
    Iteration 5 time: 3.963 secs.
    Rows Read: 500000, Total Rows Processed: 500000, Total Chunk Time: 0.066 seconds
    Rows Read: 500000, Total Rows Processed: 1000000, Total Chunk Time: 0.191 seconds
    Rows Read: 500000, Total Rows Processed: 1500000, Total Chunk Time: 0.198 seconds
    Rows Read: 500000, Total Rows Processed: 2000000, Total Chunk Time: 0.187 seconds
    Rows Read: 500000, Total Rows Processed: 2500000, Total Chunk Time: 0.191 seconds
    Rows Read: 500000, Total Rows Processed: 3000000, Total Chunk Time: 0.200 seconds
    Rows Read: 500000, Total Rows Processed: 3500000, Total Chunk Time: 0.189 seconds
    Rows Read: 500000, Total Rows Processed: 4000000, Total Chunk Time: 0.197 seconds
    Rows Read: 500000, Total Rows Processed: 4500000, Total Chunk Time: 0.187 seconds
    Rows Read: 500000, Total Rows Processed: 5000000, Total Chunk Time: 0.197 seconds
    Rows Read: 500000, Total Rows Processed: 5500000, Total Chunk Time: 0.187 seconds
    Rows Read: 500000, Total Rows Processed: 6000000, Total Chunk Time: 0.189 seconds
    Rows Read: 500000, Total Rows Processed: 6500000, Total Chunk Time: 0.188 seconds
    Rows Read: 500000, Total Rows Processed: 7000000, Total Chunk Time: 0.190 seconds
    Rows Read: 500000, Total Rows Processed: 7500000, Total Chunk Time: 0.208 seconds
    Rows Read: 500000, Total Rows Processed: 8000000, Total Chunk Time: 0.204 seconds
    Rows Read: 500000, Total Rows Processed: 8500000, Total Chunk Time: 0.184 seconds
    Rows Read: 500000, Total Rows Processed: 9000000, Total Chunk Time: 0.193 seconds
    Rows Read: 500000, Total Rows Processed: 9500000, Total Chunk Time: 0.186 seconds
    Rows Read: 500000, Total Rows Processed: 10000000, Total Chunk Time: 0.197 seconds
    Iteration 6 time: 3.903 secs.
    Rows Read: 500000, Total Rows Processed: 500000, Total Chunk Time: 0.061 seconds
    Rows Read: 500000, Total Rows Processed: 1000000, Total Chunk Time: 0.192 seconds
    Rows Read: 500000, Total Rows Processed: 1500000, Total Chunk Time: 0.198 seconds
    Rows Read: 500000, Total Rows Processed: 2000000, Total Chunk Time: 0.198 seconds
    Rows Read: 500000, Total Rows Processed: 2500000, Total Chunk Time: 0.204 seconds
    Rows Read: 500000, Total Rows Processed: 3000000, Total Chunk Time: 0.193 seconds
    Rows Read: 500000, Total Rows Processed: 3500000, Total Chunk Time: 0.196 seconds
    Rows Read: 500000, Total Rows Processed: 4000000, Total Chunk Time: 0.201 seconds
    Rows Read: 500000, Total Rows Processed: 4500000, Total Chunk Time: 0.197 seconds
    Rows Read: 500000, Total Rows Processed: 5000000, Total Chunk Time: 0.195 seconds
    Rows Read: 500000, Total Rows Processed: 5500000, Total Chunk Time: 0.181 seconds
    Rows Read: 500000, Total Rows Processed: 6000000, Total Chunk Time: 0.193 seconds
    Rows Read: 500000, Total Rows Processed: 6500000, Total Chunk Time: 0.198 seconds
    Rows Read: 500000, Total Rows Processed: 7000000, Total Chunk Time: 0.194 seconds
    Rows Read: 500000, Total Rows Processed: 7500000, Total Chunk Time: 0.199 seconds
    Rows Read: 500000, Total Rows Processed: 8000000, Total Chunk Time: 0.191 seconds
    Rows Read: 500000, Total Rows Processed: 8500000, Total Chunk Time: 0.192 seconds
    Rows Read: 500000, Total Rows Processed: 9000000, Total Chunk Time: 0.201 seconds
    Rows Read: 500000, Total Rows Processed: 9500000, Total Chunk Time: 0.198 seconds
    Rows Read: 500000, Total Rows Processed: 10000000, Total Chunk Time: 0.201 seconds
    Iteration 7 time: 3.956 secs.
    Rows Read: 500000, Total Rows Processed: 500000, Total Chunk Time: 0.060 seconds
    Rows Read: 500000, Total Rows Processed: 1000000, Total Chunk Time: 0.189 seconds
    Rows Read: 500000, Total Rows Processed: 1500000, Total Chunk Time: 0.199 seconds
    Rows Read: 500000, Total Rows Processed: 2000000, Total Chunk Time: 0.215 seconds
    Rows Read: 500000, Total Rows Processed: 2500000, Total Chunk Time: 0.231 seconds
    Rows Read: 500000, Total Rows Processed: 3000000, Total Chunk Time: 0.220 seconds
    Rows Read: 500000, Total Rows Processed: 3500000, Total Chunk Time: 0.202 seconds
    Rows Read: 500000, Total Rows Processed: 4000000, Total Chunk Time: 0.195 seconds
    Rows Read: 500000, Total Rows Processed: 4500000, Total Chunk Time: 0.213 seconds
    Rows Read: 500000, Total Rows Processed: 5000000, Total Chunk Time: 0.211 seconds
    Rows Read: 500000, Total Rows Processed: 5500000, Total Chunk Time: 0.191 seconds
    Rows Read: 500000, Total Rows Processed: 6000000, Total Chunk Time: 0.188 seconds
    Rows Read: 500000, Total Rows Processed: 6500000, Total Chunk Time: 0.209 seconds
    Rows Read: 500000, Total Rows Processed: 7000000, Total Chunk Time: 0.190 seconds
    Rows Read: 500000, Total Rows Processed: 7500000, Total Chunk Time: 0.195 seconds
    Rows Read: 500000, Total Rows Processed: 8000000, Total Chunk Time: 0.202 seconds
    Rows Read: 500000, Total Rows Processed: 8500000, Total Chunk Time: 0.222 seconds
    Rows Read: 500000, Total Rows Processed: 9000000, Total Chunk Time: 0.224 seconds
    Rows Read: 500000, Total Rows Processed: 9500000, Total Chunk Time: 0.213 seconds
    Rows Read: 500000, Total Rows Processed: 10000000, Total Chunk Time: 0.223 seconds
    Iteration 8 time: 4.194 secs.
    Elapsed computation time: 29.316 secs.
    > # View the model results
    > summary(ccFraudglm)
    Call:
    rxLogit(formula = fraudRisk ~ gender + cardholder + balance + numTrans +
        numIntlTrans + creditLine, data = ccFraud_xdf)

    Logistic Regression Results for: fraudRisk ~ gender + cardholder + balance + numTrans + numIntlTrans + creditLine
    Data: ccFraud_xdf (RxXdfData Data Source)
    File name: ccFraud.xdf
    Dependent variable(s): fraudRisk
    Total independent variables: 8 (Including number dropped: 1)
    Number of valid observations: 1e+07
    Number of missing observations: 0
    -2*LogLikelihood: 2149329.7462 (Residual deviance on 9999993 degrees of freedom)

    Coefficients:
                   Estimate Std. Error z value Pr(>|z|)
    (Intercept)  -8.888e+00  1.292e-02 -687.88 2.22e-16 ***
    gender=F     -6.010e-01  3.716e-03 -161.73 2.22e-16 ***
    gender=M        Dropped    Dropped Dropped  Dropped
    cardholder    4.703e-01  9.749e-03   48.24 2.22e-16 ***
    balance       3.755e-04  4.558e-07  823.71 2.22e-16 ***
    numTrans      4.659e-02  6.526e-05  713.86 2.22e-16 ***
    numIntlTrans  2.967e-02  1.757e-04  168.83 2.22e-16 ***
    creditLine    9.297e-02  1.389e-04  669.13 2.22e-16 ***
    ---
    Signif. codes:  0 ‘***’ 0.001 ‘**’ 0.01 ‘*’ 0.05 ‘.’ 0.1 ‘ ’ 1

    Condition number of final variance-covariance matrix: 5.5717
    Number of iterations: 8
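The estimates in a logistic regression are on the log-odds scale, so exponentiating them gives odds ratios, which are often easier to read. The following sketch does that in plain R using the rounded estimates copied by hand from the summary above; the vector `coefs` is an illustration introduced here and is not extracted from the fitted model object.

```r
# Estimates copied (rounded) from the summary output above.
coefs <- c(
  `gender=F`   = -6.010e-01,
  cardholder   =  4.703e-01,
  balance      =  3.755e-04,
  numTrans     =  4.659e-02,
  numIntlTrans =  2.967e-02,
  creditLine   =  9.297e-02
)

# exp() turns each log-odds coefficient into an odds ratio.
odds_ratios <- exp(coefs)
print(round(odds_ratios, 4))
```

The odds ratio for gender=F comes out at roughly 0.55, meaning that in this model, with the other variables held fixed, the odds of fraudRisk = 1 for F are a little over half of those for M (the dropped reference level). All other odds ratios are above 1 because their coefficients are positive.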

Once the model is built, we can use the rxPredict function to make predictions on the data.

    > # Predict with the logit model
    > rxPredict(ccFraudglm, data = ccFraud_xdf,
    +           outData = ccFraud_xdf, overwrite = TRUE)
    Rows Read: 500000, Total Rows Processed: 500000, Total Chunk Time: 0.116 seconds
    Rows Read: 500000, Total Rows Processed: 1000000, Total Chunk Time: 0.271 seconds
    Rows Read: 500000, Total Rows Processed: 1500000, Total Chunk Time: 0.284 seconds
    Rows Read: 500000, Total Rows Processed: 2000000, Total Chunk Time: 0.268 seconds
    Rows Read: 500000, Total Rows Processed: 2500000, Total Chunk Time: 0.270 seconds
    Rows Read: 500000, Total Rows Processed: 3000000, Total Chunk Time: 0.268 seconds
    Rows Read: 500000, Total Rows Processed: 3500000, Total Chunk Time: 0.297 seconds
    Rows Read: 500000, Total Rows Processed: 4000000, Total Chunk Time: 0.269 seconds
    Rows Read: 500000, Total Rows Processed: 4500000, Total Chunk Time: 0.271 seconds
    Rows Read: 500000, Total Rows Processed: 5000000, Total Chunk Time: 0.273 seconds
    Rows Read: 500000, Total Rows Processed: 5500000, Total Chunk Time: 0.302 seconds
    Rows Read: 500000, Total Rows Processed: 6000000, Total Chunk Time: 0.287 seconds
    Rows Read: 500000, Total Rows Processed: 6500000, Total Chunk Time: 0.270 seconds
    Rows Read: 500000, Total Rows Processed: 7000000, Total Chunk Time: 0.279 seconds
    Rows Read: 500000, Total Rows Processed: 7500000, Total Chunk Time: 0.291 seconds
    Rows Read: 500000, Total Rows Processed: 8000000, Total Chunk Time: 0.271 seconds
    Rows Read: 500000, Total Rows Processed: 8500000, Total Chunk Time: 0.284 seconds
    Rows Read: 500000, Total Rows Processed: 9000000, Total Chunk Time: 0.305 seconds
    Rows Read: 500000, Total Rows Processed: 9500000, Total Chunk Time: 0.280 seconds
    Rows Read: 500000, Total Rows Processed: 10000000, Total Chunk Time: 0.278 seconds
    >
    > # View the first ten records of the prediction results
    > rxGetInfo(ccFraud_xdf, numRows = 10)
    File name: C:\Program Files\Microsoft\R Server\R_SERVER\library\RevoScaleR\rxLibs\x64\ccFraud.xdf
    Number of observations: 1e+07
    Number of variables: 10
    Number of blocks: 20
    Compression type: zlib
    Data (10 rows starting with row 1):
       custID gender state cardholder balance numTrans numIntlTrans creditLine fraudRisk fraudRisk_Pred
     1      1      F    35          1    3000        4           14          2         0   0.0008208775
     2      2      M     2          1       0        9            0         18         0   0.0017884961
     3      3      M     2          1       0       27            9         16         0   0.0044740019
     4      4      F    15          1       0       12            0          5         0   0.0003372351
     5      5      F    46          1       0       11           16          7         0   0.0006229633
     6      6      M    44          2    5546       21            0         13         0   0.0246553959
     7      7      F     3          1    2000       41            0          1         0   0.0018993247
     8      8      F    10          1    6016       20            3          6         0   0.0055909255
     9      9      M    32          1    2428        4           10         22         0   0.0068448406
    10     10      F    23          1       0       18           56          5         0   0.0023438135

The predictions are appended as the last column of the data (fraudRisk_Pred).
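To see where a value in fraudRisk_Pred comes from, the prediction for the first record can be recomputed by hand: multiply each coefficient by the record's value, add them up, and pass the sum through the logistic function. This is a sketch using the rounded estimates from the model summary and the values of row 1 shown above, so the result only matches the reported prediction approximately; the names `coefs` and `row1` are introduced here for illustration.

```r
# Rounded estimates copied from the model summary above.
coefs <- c(intercept = -8.888, genderF = -0.6010, cardholder = 0.4703,
           balance = 3.755e-04, numTrans = 4.659e-02,
           numIntlTrans = 2.967e-02, creditLine = 9.297e-02)

# Row 1 of the data: gender F, cardholder 1, balance 3000,
# numTrans 4, numIntlTrans 14, creditLine 2 (intercept term = 1).
row1 <- c(intercept = 1, genderF = 1, cardholder = 1,
          balance = 3000, numTrans = 4,
          numIntlTrans = 14, creditLine = 2)

# Linear predictor on the log-odds scale, then the inverse logit.
linear_predictor <- sum(coefs * row1)
p_row1 <- plogis(linear_predictor)

print(linear_predictor)
print(p_row1)  # compare with fraudRisk_Pred for row 1 (0.0008208775)
```

The hand-computed probability is about 0.00082, in line with the first value of fraudRisk_Pred; the small remaining difference comes from rounding the coefficients to four significant digits.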

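That's all for tonight. The goal was to introduce some basic usage of Microsoft R Server. If you are struggling to analyze and model enterprise-scale data, you can download and install MRS and try it on your real business problems. Beyond the simple functions shown above, Microsoft has also developed a dedicated MicrosoftML package, which provides new machine learning functionality with higher speed, performance, and scalability, particularly for large volumes of text data or high-dimensional categorical data.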

Interested readers can consult the relevant help documentation.